While everyone is busy searching for the right marketing strategy, very few focus on the results. As a result, many marketing campaigns fail without anyone noticing, and this silent failure wastes both time and budget. At BrandLoom, we see this happen often when businesses rely on assumptions instead of data-backed decisions. A/B testing strategies allow performance marketers to identify the best-performing campaigns and shift budget towards them for maximum ROI.

A/B testing gives performance marketers an efficient way to find out which approach works for them. Whether you are tweaking the text of a CTA button or sharpening a headline, you cannot rely on guesswork to maximize the output of your campaign. In this blog, you will learn about the A/B testing methods BrandLoom’s performance marketing experts deploy and how they help drive conversions.

What Is A/B Testing In Marketing?

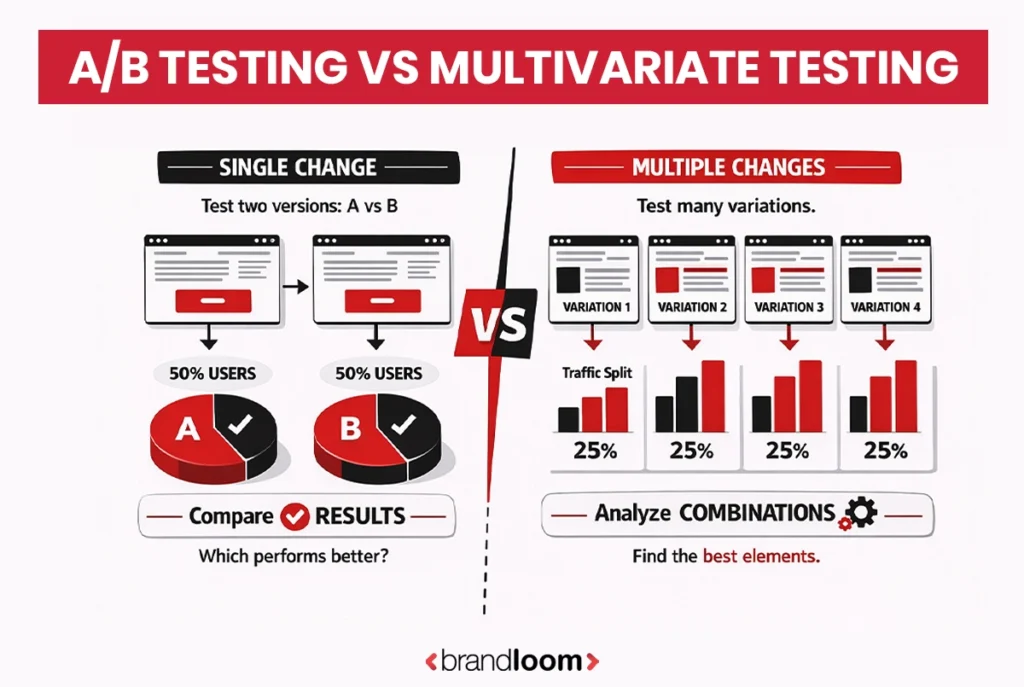

Not every decision can be made based on guessing games. A/B testing is a key marketing method that allows marketers to choose the best version between two potential choices. It is also known as split testing.

Suppose you want to run ad campaigns or optimize your landing page. A/B testing identifies the most effective version so you can drive maximum conversions. Two versions, A and B, are created around the same business goal, which is where the name comes from. The target customers themselves then decide which one is better.

Marketers show one version (A) to half of their audience and a slightly different version (B) to the other half, then track which one gets more clicks, sign-ups, or subscriptions. This simple user experience research method can save a large share of campaign budget that would otherwise be wasted. Now that we know what A/B testing in digital marketing is, let’s look at how it works alongside AI and how it differs from multivariate testing.
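
The half-and-half split described above can be sketched in a few lines of Python. This is only an illustration, not how any particular testing tool works internally: the function name `assign_variant`, the experiment label `cta_test`, and the `user-N` IDs are all hypothetical. It hashes each user ID so that the same visitor always lands in the same group.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "cta_test") -> str:
    """Deterministically assign a user to variant 'A' or 'B'."""
    # Hash the (hypothetical) experiment name together with the user ID so the
    # same user always sees the same version within one experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Even hash values go to A, odd values go to B (roughly a 50/50 split).
    return "A" if int(digest, 16) % 2 == 0 else "B"

# Count how 10,000 made-up users are distributed across the two versions.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Hashing instead of drawing a fresh random number on every visit keeps the experience consistent for returning visitors, which matters when the metric is measured over several sessions.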

How A/B Testing Works in the AI-Driven Marketing Landscape

AI-powered marketing tools have many applications, and A/B testing is one of them. Today, AI supports split testing by automating experiments, predicting winning variations faster, and scaling optimization efforts with greater precision. At BrandLoom, we work with AI-driven testing to help brands speed up decision-making and get higher conversions with less guesswork.

Here’s how A/B testing works in the modern, AI-driven marketing ecosystem:

1. AI Automates Variation Creation

Instead of manually creating versions A and B, AI tools can automatically generate multiple variations of:

- Headlines

- CTA buttons

- Visual creatives

- Ad copies

AI analyzes historical performance patterns and produces versions designed to outperform previous results.

2. Smarter Audience Segmentation

Traditional A/B tests divide audiences randomly, which often leads to generic, surface-level insights. AI-driven A/B testing takes this a step further by analyzing behavior patterns, user intent, demographics, and past engagement history to understand who is most likely to respond to each variation.

AI clusters users into micro-segments based on how they browse, click, scroll, purchase, or interact with your brand. Instead of showing Variations A and B to completely random groups, AI directs each version to the audience segment whose behavior aligns with it.

This creates more accurate, deeply actionable results, because the test reflects how different audience groups naturally behave rather than relying on broad assumptions. Performance marketers can then craft highly personalized campaigns that resonate better and convert faster.

3. Real-Time Performance Prediction

AI has transformed A/B testing from a slow, manual process into a fast, automated, and highly accurate strategy tool. Today, AI quickly analyzes user behavior, identifies winning variations, and even predicts which version will perform best. Instead of testing two options for weeks, AI uses real-time data to optimize campaigns instantly. It personalizes content for different audience segments, improves engagement, and helps brands make smarter decisions with less guesswork.
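
One common technique behind this kind of real-time optimization is a multi-armed bandit, which gradually sends more traffic to the version that appears to be winning. The sketch below uses Thompson sampling on simulated visitors. It is an assumption that any given tool works this way, and the conversion rates, the function name `thompson_sampling`, and the number of rounds are made up for illustration.

```python
import random

def thompson_sampling(true_rates, rounds=5000, seed=42):
    """Simulate adaptive traffic allocation across variants.

    true_rates holds hypothetical conversion rates per variant; in a real
    campaign these are unknown and only the observed outcomes are available.
    Returns how many simulated visitors each variant received.
    """
    rng = random.Random(seed)
    wins = [0] * len(true_rates)    # conversions observed per variant
    losses = [0] * len(true_rates)  # non-conversions observed per variant
    shown = [0] * len(true_rates)   # visitors sent to each variant

    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta
        # posterior, then show the variant with the highest draw.
        draws = [rng.betavariate(wins[i] + 1, losses[i] + 1)
                 for i in range(len(true_rates))]
        choice = draws.index(max(draws))
        shown[choice] += 1
        # Simulate whether this visitor converts.
        if rng.random() < true_rates[choice]:
            wins[choice] += 1
        else:
            losses[choice] += 1
    return shown

# Variant A converts at 5%, variant B at 15% (both assumed for the simulation).
shown = thompson_sampling([0.05, 0.15])
print(dict(zip(["A", "B"], shown)))
```

Unlike a fixed 50/50 test, this approach trades some statistical cleanliness for less traffic spent on the weaker version while the test is still running.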

4. Continuous Learning and Optimization

AI keeps learning even after the test ends. It studies user behavior, seasonal trends, platform changes, and competitor moves in real time. This continuous learning helps marketers refine campaigns constantly, not just during testing, leading to smarter decisions and better performance over time.

What Is The Difference Between A/B and Multivariate Testing?

While the two may sound similar, multivariate testing is a different type of UX research method. Let us explore.

| A/B Testing | Multivariate Testing |
| --- | --- |
| 1. Two versions (A and B) of a single element are tested. | 1. Multiple elements are tested simultaneously. |
| 2. There are two variants at most (A and B). | 2. There are several variants representing all possible combinations. The number may go up to 12 variants. |
| 3. Can start working with a smaller sample size. | 3. Without a large sample size, the results have no statistical grounding. |
| 4. The traffic requirement is low to moderate. | 4. The traffic requirement is high. |
| 5. A/B testing is perfect for beginners. | 5. This testing method is ideal for experts. |
| 6. You can get results in days or weeks. | 6. The results may take months. |
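
To see why multivariate tests need so much more traffic, it helps to count the combinations. The short sketch below uses made-up headlines, CTA labels, and image types, along with a hypothetical visitor total; it simply multiplies out every combination.

```python
from itertools import product

# Hypothetical options for three page elements.
headlines = ["Save time today", "Work smarter", "Try it free"]
cta_labels = ["Learn More", "Get Your Free Trial"]
hero_images = ["photo", "illustration"]

# Every combination of headline, CTA, and image is its own variant.
combinations = list(product(headlines, cta_labels, hero_images))
print(len(combinations))  # 3 x 2 x 2 = 12 variants

# The same traffic is spread much thinner than in a two-way A/B test.
total_visitors = 12_000  # assumed traffic for the test period
print(total_visitors // 2)                  # visitors per version in an A/B test
print(total_visitors // len(combinations))  # visitors per multivariate variant
```

With the same 12,000 visitors, each A/B version is seen 6,000 times, while each multivariate variant is seen only 1,000 times, which is why those tests take longer to reach reliable conclusions.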

What Does A/B Testing Involve?

Before taking up A/B testing best practices, it is important to understand what goes into a test. The workflow involves three things.

- A campaign is required for testing purposes. Be it an ad campaign or a newsletter, it is important to determine what sits at the center of your A/B test.

- Goal setting is about defining the primary metric and the elements to test. For example, you may want to test the CTA button, the headline, or something else. You can also forecast the improvement you expect, for instance an X% increase in sales.

- The testing element is of utmost importance. Observe and decide whether you want to test the headline, the button background color, or another element. The entire process then revolves around that choice.

Where Should A/B Testing Be Used?

A/B testing strategies are focused on boosting conversions as well as user experience. This method can be used for:

- Websites and landing pages (e.g., headlines, hero section, CTA buttons, forms, layouts, pricing displays, etc.)

- Search, display, and social ads (e.g., ad copies, visuals, landing page relevance, and CTAs)

- Email marketing (e.g., preheader text, subject lines, content layout, sender name, and send times)

- Mobile apps and push notifications (e.g., app onboarding, in-app and push messages)

- eCommerce and checkout page (e.g., product page, pricing details/shipping, shopping cart)

Setting Up A/B Test Campaign For Marketing Ads

Developing successful test variations is a process that involves planning and strategic thought. It begins with determining your primary goal, whether it’s improving click-through rate, reducing bounce rate, or increasing user engagement on your product page.

Begin by choosing one factor at a time to test. This targeted strategy minimizes statistical noise and leads to straightforward testing results. Typical elements might be different ad copy, alternate calls to action, or similar product or service offerings. The sample size should be large enough to provide data that will be statistically meaningful in identifying relevant user behavior trends.

When setting up a split testing campaign, ensure that both versions are shown to similar target audiences under the same conditions. This removes extraneous variables that may taint the test. The test should run long enough to smooth out weekly variations in user behavior, yet short enough that seasonal changes do not distort the results.
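
How large is "large enough" for a sample? A rough estimate can be made with the standard sample-size formula for comparing two proportions. The sketch below is one way to do it, under assumptions: a 5% significance level, 80% power, and a made-up baseline and target conversion rate. The function name `sample_size_per_variant` is illustrative.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect the given change."""
    # z-scores for a two-sided significance level and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two conversion rates.
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Assumed scenario: the current page converts at 5% and we hope for 6%.
n = sample_size_per_variant(0.05, 0.06)
print(f"Visitors needed per variant: {n}")
```

The smaller the improvement you want to detect, the more visitors each version needs, so tests on subtle changes require considerably more traffic.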

The Impact Of A/B Testing Strategies On Marketing Campaigns

A/B testing plays a crucial role in the success of digital marketing campaigns. By comparing two different versions of a campaign element, whether it’s an ad copy, CTA button, landing page design, or product page layout, marketers can learn exactly what drives better performance.

Instead of relying on guesswork, you get real user data to support your decisions. This helps you understand how different variations impact user behavior, so you can make smarter adjustments. For instance, changing just one headline could generate more clicks for your ad, and repositioning the CTA button can lower the bounce rate.

Here’s how A/B testing boosts marketing performance:

1. Improves Click-Through Rate (CTR)

The click-through rate is an essential metric for gauging how compelling your marketing message is. With the help of A/B testing, one can check which version of copy, headline, or visual element catches the attention of the intended audience and motivates them to act.

For example, changing the CTA from “Learn More” to “Get Your Free Trial” could lift the CTR noticeably. Similarly, shifting the tone to something more urgent or benefit-driven will alter how users respond. Even small visual details, such as button color or placement, can make a big difference in performance.

By consistently testing these elements, marketers can identify patterns in what motivates their target audience to click, which in turn increases traffic to landing pages and product pages.

2. Reduces Bounce Rate

A high bounce rate can often indicate that the expectations of the user were not met after clicking. A/B testing lets you test out different page elements, such as headlines, images, form length, or navigation, to see which one increases the time people spend and the number of pages they view on your site.

For instance, if version A of your landing page contains a dense body of text, while version B includes the use of bullet points and visuals, it might be that version B causes more people to scroll down or click on internal links. That’s a strong indication that your page is now aligned with the user’s expectations.

When users find content that speaks to them, they are more likely to stay, engage, and eventually convert. A/B testing empowers marketers to reduce bounce rates by creating more relevant and user-friendly experiences.

3. Enhances User Engagement

User engagement describes how actively and meaningfully your audience interacts with your content. It includes actions such as clicking through pages, spending time on the site, viewing videos, and filling out forms.

Split tests reveal which presentation, copy, or interactive feature yields greater engagement. You may test, for example, whether a video explainer keeps users longer on a landing page than a static image or whether a carousel of testimonials is more effective than just one review.

With these insights, it becomes easy to create user experiences that feel intuitive and enjoyable. The more engaged the customer, the more trust they develop in your brand. This, in turn, paves the way for conversions, referrals, and long-term loyalty.

4. Maximizes Return on Investment (ROI)

Every marketing campaign requires resources such as budget, time, and effort. A/B testing helps you put those resources to optimal use. By keeping the variations that perform well and eliminating those that don’t, you spend less on the wrong marketing efforts and earn higher returns on what works.

Instead of running multiple campaigns blindly and hoping one of them pans out, advertisers place their spend on proven activities. This makes managing Google Ads, email flows, or social campaigns more efficient.

For instance, if version A of your ad has a cost-per-click (CPC) of ₹12 and version B has a CPC of ₹7 and also converts better, the decision becomes obvious. Multiply this impact across your campaign elements, and you get significant long-term savings and better returns.
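
The CPC example can be turned into a cost-per-conversion comparison with simple arithmetic. In the sketch below, the CPC figures come from the example above, while the conversion rates (2% and 3%) and the ₹10,000 budget are assumptions added purely for illustration.

```python
def cost_per_conversion(cpc, conversion_rate):
    """Average ad spend needed to win one conversion."""
    return cpc / conversion_rate

# CPC values are from the example; the conversion rates are assumed.
cpa_a = cost_per_conversion(cpc=12, conversion_rate=0.02)
cpa_b = cost_per_conversion(cpc=7, conversion_rate=0.03)
print(f"Version A: ₹{cpa_a:.2f} per conversion")
print(f"Version B: ₹{cpa_b:.2f} per conversion")

# Conversions the same (assumed) budget buys with each version.
budget = 10_000
print(f"A: {budget / cpa_a:.1f} conversions, B: {budget / cpa_b:.1f} conversions")
```

Looking at cost per conversion rather than CPC alone guards against picking an ad that is cheap to click but rarely converts.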

5. Identifies What Resonates With Your Audience

A/B testing isn’t just about tuning performance; it’s also a very powerful tool for audience research. Watching the reactions of your target audience to the various test options gives you insight into their likes, motivators, and behaviors.

You might find out that your audience prefers direct language over flowery text or that a green CTA button builds more trust than a red one. You might similarly discover that placing testimonials closer to the CTA improves conversion or that offering a 14-day free trial generates more sign-ups than a discount.

These small findings collectively become a knowledge base about your audience, enabling you to create marketing campaigns that are more personalized, relevant, and rewarding for them. They also help build stronger emotional connections between you and your customers.

6. Creates a Culture of Continuous Optimization

Perhaps the most powerful effect of A/B testing is the mindset of continuous optimization it creates. Every campaign becomes a chance to learn something new and evolve. Your team begins to embrace experimentation and uses data to guide its decisions.

Instead of treating each campaign as a one-time exercise, you start to view it as part of an ongoing process: launch, test, analyze, optimize, and repeat. This process leads to better planning and more insightful creative decisions, resulting in accelerated growth.

Whether you’re a small startup or a well-established enterprise, developing a culture around A/B testing keeps you flexible, agile, and competitive in a fast-moving digital landscape.

These small improvements add up over time to make big gains. So whether you’re running Google Ads, sending out emails, or working on your website, A/B testing provides a pathway to optimizing every stage of your marketing funnel. When applied consistently and effectively, it transforms every campaign into a learning experience, one that gets you closer to your performance targets.

Key A/B Testing Strategy Frameworks

An A/B test strategy needs to be planned in a clear and concrete manner. A framework ensures that every test you carry out gives useful information and leans towards practical results. Here are the major strategies and common frameworks that you can utilize to test wisely:

Basic Best Practices for A/B Testing Strategies

Before jumping into a framework, make sure the following basics are in place:

- Set One Clear Goal: Focus each test on a single objective: click-through rate, bounce rate, or sign-ups. This way, results remain accurate and actionable.

- Only Test One Element at a Time: Testing should be conducted with a single variable, such as the headline, CTA, image, layout, or color of the button. This way, it will be clear what affected the performance.

- Choose a Large Sample Size: Make sure you test with enough users for the statistics to be reliable. With only a few people, the results might be misleading.

- Use Split Testing Tools: Tools like Optimizely or VWO help you divide your audience and track how each variation performs. (Google Optimize, once a popular free option, was discontinued by Google in September 2023.)

- Run the Test Long Enough: Give your test time to gather data. Ending it too soon can lead to wrong conclusions.

Popular Frameworks to Guide A/B Testing Strategies

To take your A/B testing strategies to the next level, these proven frameworks can help you plan smarter experiments and get meaningful results.

1. The LIFT Framework

The LIFT Model considers six factors that directly influence user behavior: value proposition, relevance, clarity, distraction, urgency, and anxiety. Together, they help you evaluate your page from a visitor’s standpoint.

For example, you evaluate whether your messaging clearly articulates the value of your offer, whether the content is aligned with what the user is looking for, and whether the layout directs attention toward the main call-to-action and away from distractions. It also encourages you to create urgency and to reduce anxiety by resolving the user’s objections.

2. The PIE Framework

The PIE Framework is useful when you have several potential A/B testing ideas and want to prioritize them. It rates candidate tests on their potential to improve the page’s performance, the importance of the page or feature under test with respect to your business goals, and their ease of implementation. By focusing on high-impact experiments that are easy to implement, this framework helps teams spend time and resources more effectively.

3. The ICE Framework

The ICE Framework works in much the same way as PIE, but gives explicit weight to your confidence in the idea. It scores each test on its potential impact, your confidence that it will succeed, and the ease with which it can be carried out. This framework is particularly useful for lean teams, or when you don’t know which ideas will work, because it steers you toward what is both promising and feasible.
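
Prioritization frameworks such as PIE and ICE are easy to apply in a spreadsheet or a few lines of code. The sketch below assumes each factor is rated from 1 to 10 and the three ratings are averaged, which is one common convention (some teams multiply the ratings instead). The test ideas and their ratings are invented for illustration.

```python
def priority_score(*ratings):
    """Average of 1-10 ratings, e.g. (impact, confidence, ease) for ICE."""
    return sum(ratings) / len(ratings)

# Hypothetical test ideas with made-up ratings for the three factors.
ideas = {
    "Rewrite homepage headline": (8, 9, 7),
    "Shorten sign-up form": (7, 8, 4),
    "Change footer link color": (2, 3, 10),
}

# Rank ideas from highest to lowest score.
ranked = sorted(ideas, key=lambda name: priority_score(*ideas[name]), reverse=True)
for name in ranked:
    print(f"{priority_score(*ideas[name]):.1f}  {name}")
```

Note that the easiest idea (the footer link) still ranks last because its expected effect is small, which is exactly the kind of trade-off these frameworks are meant to surface.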

4. The AARRR Framework

The AARRR Framework divides the customer journey into five stages: acquisition, activation, retention, revenue, and referral. This way, you ensure that your A/B testing strategies extend well beyond the homepage or advertisements and contribute to the whole funnel.

So, you could test landing pages to attract more users (acquisition), improve the sign-up flow for better engagement (activation), run experiments on email sequences (retention), test pricing or upsells to increase income (revenue), and experiment with incentives to encourage users to refer others (referral).

Together, these frameworks lay a foundation strong enough for targeted data-driven A/B testing strategies that can improve performance across your entire marketing funnel.

Major Challenges Performance Marketers Face In A/B Testing

A/B testing strategies play an important role in assessing and improving marketing performance, but running them is often complicated. If not handled properly, the issues below can lead to misleading results and wasted resources. Here’s a look at the most common problems marketers face while running A/B tests.

1. Insufficient Traffic Leading to Unreliable Test Results

A/B testing depends heavily on website traffic. With a low number of visits, your test results may not be statistically significant, and in many cases they could be incorrect or unrepresentative of how your audience truly behaves. Low traffic also slows down insights and delays decision-making. For accurate A/B testing, marketers need to ensure a decent sample size and allow the test to run long enough to gather reliable data.

2. Multiple Variable Testing

One of the biggest mistakes in A/B testing is changing multiple elements at the same time, like headlines, button colors, images, and layout, all in one go. Testing too many variables together makes it unclear which element is actually causing the performance uplift, and this invalidates your results. It’s always best to test one element at a time (a practice known as isolated testing) to identify what truly works.

3. Short Testing Period Misrepresents User Behavior

Ending your A/B test too early can lead to wrong conclusions. User behavior varies by day of the week, by time of day, and around special events like holidays. Testing for only a couple of days can miss how various segments interact with your content. Performance marketers should therefore run a test for a suitable duration, ideally one or two full business cycles, before analyzing a change in performance.
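
A quick way to plan the duration is to divide the visitors you need by the traffic you get, then round up to full weeks so that every weekday is represented equally. The sketch below assumes the required sample per variant is already known; the function name `estimate_duration_days` and the traffic figures are hypothetical.

```python
from math import ceil

def estimate_duration_days(sample_per_variant, daily_visitors, variants=2):
    """Estimate test length in days, rounded up to whole weeks."""
    # Days needed for all variants together to reach the required sample.
    days = ceil(sample_per_variant * variants / daily_visitors)
    # Round up to full weeks so weekday and weekend behavior is covered evenly.
    return ceil(days / 7) * 7

# Assumed inputs: 8,000 visitors needed per variant, 1,500 visitors per day.
print(estimate_duration_days(8000, 1500))
```

Here the raw estimate is 11 days, which is rounded up to 14 so the test spans two complete weeks.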

4. Bias in Sample Groups Affecting Outcomes

The whole idea of an A/B test is to split the audience randomly and evenly. However, if your groups are not well-balanced, your test results can be biased. For instance, if one group contains mostly returning visitors and the other mostly new users, the comparison is skewed. Such bias results in wrong insights and poor marketing decisions. Marketers need to randomize assignment and check that both groups are equally representative of the total audience.
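
One basic sanity check for a biased split is to test whether the two groups are roughly the size you intended, often called a sample ratio mismatch check. The sketch below covers only group size for an intended 50/50 split, not group composition such as new versus returning visitors, and uses a normal approximation. The function name and the visitor counts are made up.

```python
from math import sqrt
from statistics import NormalDist

def sample_ratio_p_value(visitors_a, visitors_b):
    """Two-sided p-value for the hypothesis that traffic was split 50/50."""
    total = visitors_a + visitors_b
    # Under a fair split, visitors_a follows Binomial(total, 0.5), which has
    # mean total / 2 and standard deviation sqrt(total * 0.25).
    z = (visitors_a - total / 2) / sqrt(total * 0.25)
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical counts: the first split looks fine, the second looks suspicious.
print(sample_ratio_p_value(5030, 4970))
print(sample_ratio_p_value(5000, 5400))
```

A very small p-value suggests that something in the assignment or tracking is broken, and the test results should not be trusted until the cause is found.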

5. Improper Goal Setting or Undefined Success Metrics

Without a defined target, an A/B test is meaningless. Many marketers dive straight into testing without even knowing what success looks like. Are you trying to optimize your conversion rates, click-through rate (CTR), time on page, or revenue? If you didn’t establish good performance metrics from the outset, you won’t know how to evaluate the test’s success. Begin with your goal and KPIs (key performance indicators) in mind. An A/B test should never be launched without a very clear goal and set of KPIs aligned with broader marketing objectives.

By understanding and addressing these challenges, performance marketers can improve the accuracy of their A/B testing strategies and make data-driven decisions that truly enhance campaign performance.

How To Measure The Impact Of A/B Testing Strategies?

Running A/B tests is only the first step. To know if your efforts are actually working, you must measure the results correctly. Tracking the right performance metrics helps you understand whether your A/B testing strategies are making a real difference to your marketing goals.

Here are the main methods to assess the success of your A/B tests:

1. Check Conversion Rates Before and After the Test

One of the most critical measures of A/B test success is the conversion rate. Whether you are testing a landing page, ad copy, or a call-to-action button, compare the conversion rate of the variation against the control over the same period, as well as against your pre-test baseline. If the variation shows a clear improvement, you know that your A/B testing strategy has performed well.
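
The comparison itself is simple arithmetic. The sketch below computes the conversion rate of each version and the relative lift of the variation over the control; the visitor and conversion counts are invented for illustration.

```python
def conversion_rate(conversions, visitors):
    """Share of visitors who completed the desired action."""
    return conversions / visitors

def relative_lift(control_rate, variant_rate):
    """Improvement of the variant relative to the control."""
    return (variant_rate - control_rate) / control_rate

# Hypothetical results: 5,000 visitors saw each version.
control = conversion_rate(200, 5000)
variant = conversion_rate(260, 5000)
lift = relative_lift(control, variant)
print(f"Control: {control:.1%}, Variant: {variant:.1%}, Lift: {lift:.0%}")
```

Reporting the relative lift alongside the raw rates makes it clear that a move from 4% to 5.2% is a 30% improvement, not a 1.2% one.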

2. Track the Click-Through Rate (CTR)

If you are testing the CTA button, headlines, or ad creatives, keep track of the click-through rate (CTR). A test variation with a higher CTR indicates that more users are responding to your message and clicking through. This tells you whether your change has made your content more attractive or effective.

3. Monitor Bounce Rate to Measure User Engagement

When users visit your site and then leave without taking any action, this is reflected in your bounce rate. A/B testing can reduce it by finding better layouts, messaging, or experiences. Test the two versions and compare bounce rates. A lower bounce rate indicates that users are staying longer and engaging more.

4. Compare Revenue or Lead Generation Results

In the case of e-commerce or lead-based businesses, the end goal is typically to drive sales or acquire leads. Measure how much revenue or how many leads each variant generated. As you test and iterate on new ideas, you should see a positive impact on the bottom line.

5. Ensure Your Data Is Statistically Significant

You can’t rely on early results or gut instinct. Apply statistical analysis to ensure that the test results can be trusted and that the improvement was not purely a coincidence. Tools such as Optimizely or VWO can report whether your A/B test results are statistically significant.
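
Testing tools normally run this check for you, but it helps to see what such a check can look like. The sketch below uses a two-proportion z-test, one standard method among several; it is not a description of what any specific tool does internally. The function name and the conversion counts are assumptions.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions or all conversions: nothing to compare
    z = (rate_b - rate_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical results: 200 of 5,000 convert on A, 260 of 5,000 on B.
p_value = two_proportion_p_value(200, 5000, 260, 5000)
print(f"p-value: {p_value:.4f}")
print("Significant at the 5% level" if p_value < 0.05 else "Not significant")
```

A p-value below the chosen threshold (commonly 0.05) means a difference this large would be unlikely if the two versions truly performed the same.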

What Makes A Successful A/B Test?

A successful A/B test doesn’t happen by accident. It takes thinking, planning, executing, and measuring. Whether you’re A/B testing a headline, a CTA button, or an entire landing page, these basics will help ensure that your A/B testing campaigns bring real, measurable results.

1. Clear, Measurable Objectives

Start with a clear goal. Ask yourself: what do I want to improve? Whether it’s raising click-through rates, lowering bounce rates, or growing conversions, your goal should be precise and quantifiable. For example, “Increase sign-ups by 15%” is a stronger A/B testing goal than just “Improve performance.”

2. Focused Variable(s): One Variable at a Time

Avoid testing too many things at once. For instance, changing your CTA, your headline, and your image all at once makes it impossible to know which change caused the result. Keep to one variable per test. This way, your results will be clean, and you can draw accurate conclusions from your A/B testing strategy.

3. Clear Target Audience Division

Split your audience into two random and equal groups: Group A (Control) and Group B (Variant). Splitting of the audience should be unbiased and should represent the entire audience. A well-selected population prevents any imbalances in results and makes sure your A/B test yields reliable insights into the behavior of the users.

4. Tool for Split Testing Success

The better the tool, the smoother the process and the more accurate the data. With Optimizely, VWO, or Adobe Target, you can set up your test, track user behavior, and measure performance automatically. With proper A/B testing tools, your testing becomes more accurate and free from manual errors.

5. Timely Data Analysis and Implementation

Once the test has run long enough to collect statistically significant data, don’t stall the analysis. Review the data right away and apply the winning variation. The sooner you act on your data-driven insights, the sooner you will see improvements in your digital performance.

Conclusion

The success of data-driven digital marketing relies on A/B testing strategies. By testing variations of ad copy, landing pages, and calls to action, marketers gain a deeper understanding of user habits and desires.

Best practices include keeping statistical significance in mind and testing changes that matter to the user, for better or for worse. Whether the goal is conversion rate optimization, CTR optimization, or bounce rate reduction, long-term testing provides an edge over your competitors.

Don’t forget: A/B testing is not about doing it once but about continuously optimizing. Each test tells you a little more about future marketing and about your customers. The companies that commit to regular testing and optimization consistently outperform those that rely on assumptions about what works.

Begin with simple A/B testing tactics and tackle just one variable at a time, then develop more complex testing programs as you grow. The investment in rigorous A/B testing is repaid by better campaign results and deeper knowledge of your customers.

For companies that want to execute a full A/B testing and optimization program, working with an agency can help drive results quickly. BrandLoom, India’s leading performance marketing agency, focuses on building a data-driven testing framework that gives teams data to act on and a clear way to demonstrate ROI and overall campaign improvement.

Frequently Asked Questions

A/B testing involves presenting your audience with two slightly different versions of the same message to determine which one gets more positive responses. A/B testing in digital marketing is all about comparing two variations of ads, landing pages, or headlines and using customer responses to find the better one.

Half your audience sees one, the other half sees the other, and you watch the results roll in. Which one gets more clicks? More sign-ups? That’s your winner. Instead of relying on guesswork, you let real people and real data guide you toward what actually works. BrandLoom, the best performance marketing agency in India, can provide you with the most effective A/B testing strategies.

A/B testing strategies help you understand the preferences of your target audience without interrupting them. Marketers stop guessing and start with what works in practice, assessing audience preferences through landing pages, emails, and other touchpoints. These strategies give them a comprehensive idea of what might work for their brand growth.

Whether your brand needs a catchier headline, a shorter sign-up form, or a clearer CTA button, A/B testing strategies are key to discovering these answers. Once you know about the shortfall, there is no turning back. With the passing time, these small changes lead to significant improvements. Be it increased sign-ups or higher sales, A/B testing marketing can work wonders for your brand. It’s not about being perfect right away; it’s about listening, learning, and getting better with every test.

A/B testing best practices revolve around small changes that can make a huge difference. In these campaigns, the marketers try to understand what makes people click. The messaging is divided into parts and is analyzed to locate the gaps. Even small things, such as the headline text or CTA background color, can influence the response you get from the audience. It is like learning about the preferences of your target market directly from them. However, they do not know that they are part of an A/B testing process, which enables honest responses over biased ones.

At times, tiny steps like tweaking the CTA button can add great value to the campaign. As you involve people, you get realistic results. With time, this information enables marketers to create campaigns that allow the target audience to connect on a deeper level. It ends up boosting conversions.

A/B testing for PPC campaigns must have statistical grounding to show that the results are more than just assumptions. In other words, significance tells you whether the winning version truly outperformed the other or whether the difference arose by mere chance. You don’t need to crunch the numbers yourself; most A/B testing tools will tell you if your results are significant. They look at how many people saw each version, how many took action, and whether the difference is strong enough to trust. If your test ran long enough and had enough people involved, and one version clearly performed better, then you are likely looking at a result you can feel confident about. It is all about knowing when it’s safe to make a change based on real audience behavior.

The best A/B testing tools are the ones that fit naturally into your campaign operations. For Google or Facebook ads, the built-in tools on these platforms enable marketers to compare different versions without extensive setup. For landing pages or websites, tools like VWO and Optimizely can help, and you do not need to learn coding. Note that Google Optimize, long a common starting point for beginners, was discontinued in September 2023; Optimizely is suited to more complex setups.

If you’re working with emails, platforms like Mailchimp or Klaviyo let you test subject lines or content with just a few clicks. The great thing is, most of these tools do the hard work by splitting your audience, tracking results, and telling you when one version clearly performs better. It is as simple as that. One does not need to learn data science to run a smart test. They just need the right tool that makes testing feel simple and natural.

Running A/B tests with artificial intelligence can take the load off marketers. While manual testing can take some time to produce results, AI can accelerate the process. Whether the question is which image appeals to customers more or which CTA position drives more conversions, AI can answer it promptly. Some smart tools even automate the process by sending more traffic to the better version, which saves you the time of monitoring tests yourself.

The tool acts like a virtual assistant for performance marketers: it tracks progress, reads the output, and shifts traffic towards the well-performing version. AI does not replace you; it simplifies your task, saves your time, and uses the results to optimize the campaign. BrandLoom, India’s leading performance marketing agency, can create and optimize your campaigns to ensure the highest outcome.

It depends on the requirements of the performance marketer. A/B testing is simple to set up and works for low-traffic sites. It requires fewer samples and gives faster results. In contrast, multivariate testing looks at several changes at once to see how different elements work together. It suits more advanced analysis and high-traffic sites, and the results may take longer to generate.

So, if you are a beginner and have a small sample, A/B testing is for you. However, if you have a larger audience and want to take your user experience research to the next level, opt for multivariate testing. You will also have to be patient, as the results may take months.

The duration of an A/B test depends on your traffic volume and how quickly you receive conversions, but most reliable tests usually run for at least 7–14 days. This timeframe captures normal variations in user behavior across weekdays and weekends. The real goal is to reach statistical significance, meaning the results are unlikely to be due to chance. Ending a test too early can give misleading results, while running it for too long can waste budget without adding value. Marketers typically monitor performance during the test but avoid making changes midway. Letting the test run its course ensures you gather stable, dependable data before choosing a winning variation.

Yes, A/B testing remains relevant even as AI becomes more common in marketing. AI does not replace A/B testing; instead, it supports it by helping marketers analyze data faster and spot patterns more efficiently. AI can automate tasks like audience segmentation or performance predictions, but A/B testing still provides a controlled way to compare versions and validate decisions. Human judgment is still essential when choosing what to test and interpreting results in context. AI improves the testing process, but the basic idea of showing two versions and measuring which performs better remains important. Together, AI and A/B testing create a more accurate, practical approach to improving campaigns.